MARM: The AI That Remembers Your Conversations

Memory Accurate Response Mode v2.2.6: the intelligent persistent memory system for AI agents (supports HTTP and STDIO). Stop fighting your AI's memory and take control of it. Get long-term recall, session continuity, and reliable conversation history, so your LLMs never lose track of what matters.

Note: This is the official MARM repository. All official versions and releases are managed here.

Forks may experiment, but official updates will always come from this repo.

📢 Project Update (December 2025)

I've been focused on developing a new build powered by MARM Systems as its memory layer, a real-world application of the technology I've been creating. This deep dive into production memory systems has given me valuable insights into how MARM performs under real workflows.

I'm returning focus to MARM-MCP in Q1 2026 with lessons learned and new improvements. The time spent studying advanced memory architectures and system behavior will directly improve upcoming MARM-MCP updates with better semantic search, optimized recall patterns, and enhanced multi-session handling.

Expected Q1 2026 improvements:

- Advanced memory indexing strategies

- Improved cross-session recall

- Performance optimizations based on real production data

Thank you for your patience and support.

MARM Demo Video: Docker Install + Seeing Persistent AI Memory in Action

https://github.com/user-attachments/assets/c7c6a162-5408-4eda-a461-610b7e713dfe

This demo video walks through a Docker pull of MARM MCP and connecting it to Claude using the claude mcp add --transport command. It then shows multiple AI agents (Claude, Gemini, Qwen) instantly sharing logs and notebook entries via MARM's persistent, universal memory, demonstrating seamless cross-agent recall and "absolute truth" notebooks in action.

Why MARM MCP: The Problem & Solution

Your AI forgets everything. MARM MCP doesn't.

Modern LLMs lose context over time, repeat prior ideas, and drift off requirements. MARM MCP solves this with a unified, persistent, MCP‑native memory layer that sits beneath any AI client you use. It blends semantic search, structured session logs, reusable notebooks, and smart summaries so your agents can remember, reference, and build on prior work—consistently, across sessions, and across tools.

MCP in One Sentence: MARM MCP provides persistent memory and structured session context beneath any AI tool, so your agents learn, remember, and collaborate across all your workflows.

The Problem → The MARM Solution

- Problem: Conversations reset; decisions get lost; work scatters across multiple AI tools.

- Solution: A universal, persistent memory layer that captures and classifies the important bits (decisions, configs, code, rationale), then recalls them by meaning—not keywords.

Before vs After

- Without MARM: lost context, repeated suggestions, drifting scope, "start from scratch."

- With MARM: session memory, cross-session continuity, concrete recall of decisions, and faster, more accurate delivery.

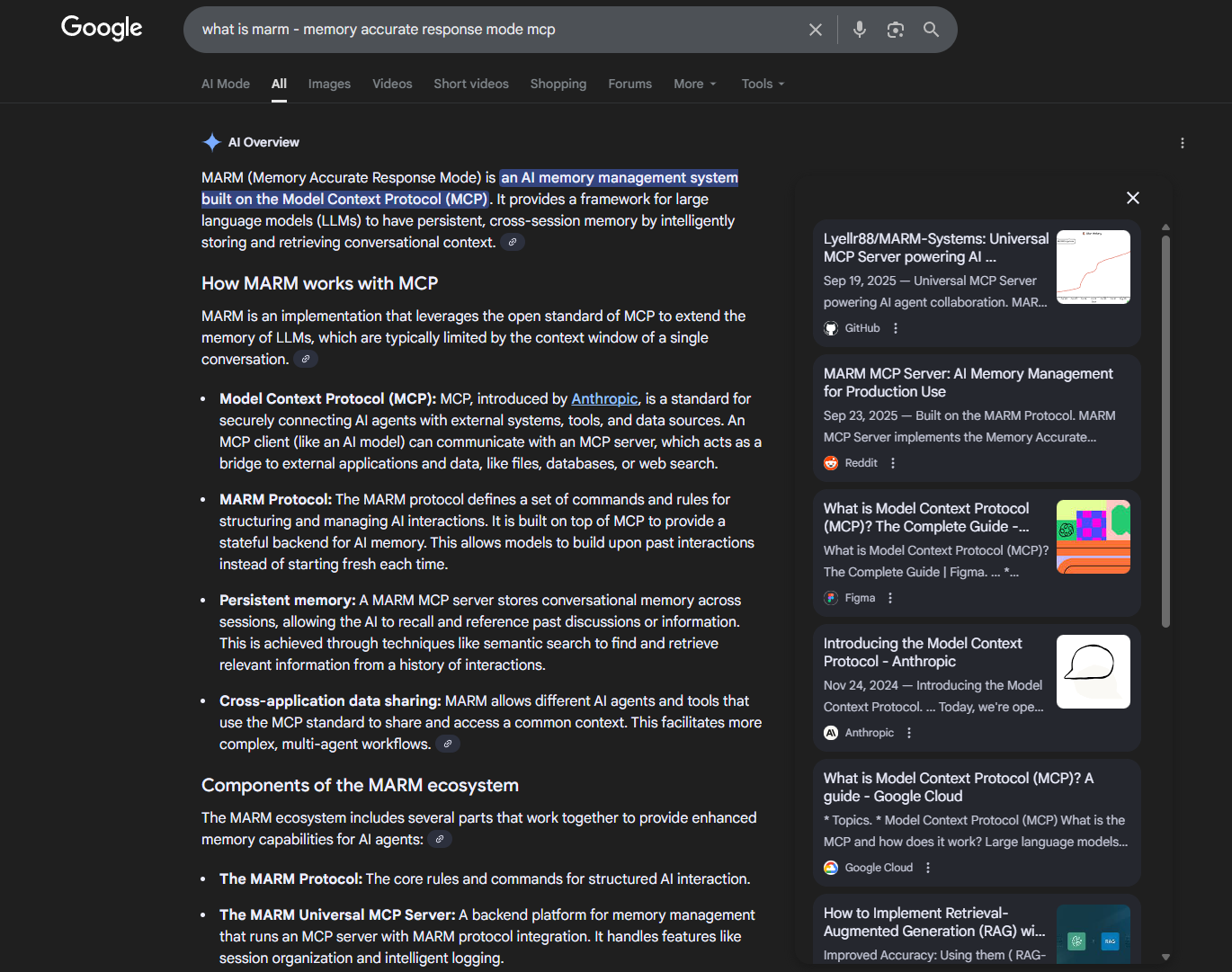

Appears in Google AI Overview for AI memory protocol queries (as of Aug 2025)

What MARM MCP Delivers

| Memory | Multi-AI | Architecture |
|---|---|---|
| Semantic Search - Find by meaning using AI embeddings | Unified Memory Layer - Works with Claude, Qwen, Gemini, MCP clients | 18 Complete MCP Tools - Full Model Context Protocol coverage |
| Auto-Classification - Content categorized (code, project, book, general) | Cross-Platform Intelligence - Different AIs learn from shared knowledge | Database Optimization - SQLite with WAL mode and connection pooling |
| Persistent Cross-Session Memory - Memories survive across agent conversations | User-Controlled Memory - "Bring Your Own History," granular control | Rate Limiting - IP-based tiers for stability |
| Smart Recall - Vector similarity search with context-aware fallbacks | MCP Compliance - Response size management for predictable performance | Docker Ready - Containerized deployment with health/readiness checks |

Learn More

- Protocol walkthrough, commands, and reseeding patterns: MARM-HANDBOOK.md

- Join the community for updates and support: MARM Discord

What Users Are Saying

“MARM successfully handles our industrial automation workflows in production. We've validated session management, persistent logging, and smart recall across container restarts in our Windows 11 + Docker environment. The system reliably tracks complex technical decisions and maintains data integrity through deployment cycles.”

@Ophy21, GitHub user (Industrial Automation Engineer)

“MARM proved exceptionally valuable for DevOps and complex Docker projects. It maintained 100% memory accuracy, preserved context on 46 services and network configurations, and enabled standards-compliant Python/Terraform work. Semantic search and automated session logs made solving async and infrastructure issues far easier. Value Rating: 9.5/10 - indispensable for enterprise-grade memory, technical standards, and long-session code management.”

@joe_nyc, Discord user (DevOps/Infrastructure Engineer)

MARM MCP Server Guide

Now that you understand the ecosystem, here is how to use the MCP server with your AI agents.

🚀 Quick Start for MCP (HTTP & Stdio)

Architecture Overview

Core Technology Stack

    FastAPI (0.115.4) + FastAPI-MCP (0.4.0)
    ├── SQLite with WAL Mode + Custom Connection Pooling
    ├── Sentence Transformers (all-MiniLM-L6-v2) + Semantic Search
    ├── Structured Logging (structlog) + Memory Monitoring (psutil)
    ├── IP-Based Rate Limiting + Usage Analytics
    ├── MCP Response Size Compliance (1MB limit)
    ├── Event-Driven Automation System
    ├── Docker Containerized Deployment + Health Monitoring
    └── Advanced Memory Intelligence + Auto-Classification
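To make the "MCP Response Size Compliance (1MB limit)" entry in the stack above more concrete, here is a minimal sketch of one way a server could cap a JSON payload. It is an illustration under stated assumptions, not MARM's actual code: the `fit_response` helper, the `results`/`truncated` field names, and the reading of "1MB" as 1,000,000 bytes are all hypothetical.

```python
import json

# Assumption: "1MB" is interpreted here as 1,000,000 bytes of UTF-8 JSON.
MAX_RESPONSE_BYTES = 1_000_000


def fit_response(items, max_bytes=MAX_RESPONSE_BYTES):
    """Keep items in order until the serialized payload would exceed max_bytes.

    Hypothetical helper: the payload shape is illustrative only.
    """
    kept = []
    for item in items:
        # Measure with "truncated": false, the longer of the two flag values,
        # so whichever payload is finally returned is guaranteed to fit.
        candidate = {"results": kept + [item], "truncated": False}
        if len(json.dumps(candidate).encode("utf-8")) > max_bytes:
            return {"results": kept, "truncated": True}
        kept.append(item)
    return {"results": kept, "truncated": False}


# Small demo with a 2,000-byte budget so the effect is easy to see.
items = [{"id": i, "content": "x" * 400} for i in range(10)]
payload = fit_response(items, max_bytes=2000)
small_payload = fit_response(items[:2], max_bytes=2000)
print(len(payload["results"]), payload["truncated"])
```

Re-serializing the payload on every iteration is quadratic and only acceptable for a sketch; a real implementation would more likely track a running byte count.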

Database Schema (5 Tables)

memories - Core Memory Storage

    CREATE TABLE memories (
        id TEXT PRIMARY KEY,
        session_name TEXT NOT NULL,
        content TEXT NOT NULL,
        embedding BLOB,                      -- AI vector embeddings for semantic search
        timestamp TEXT NOT NULL,
        context_type TEXT DEFAULT 'general', -- Auto-classified content type
        metadata TEXT DEFAULT '{}',
        created_at TEXT DEFAULT CURRENT_TIMESTAMP
    );
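As a rough illustration of how the `embedding` BLOB column can support recall by meaning, the sketch below stores vectors as packed float32 bytes and ranks rows by cosine similarity. This is an assumption about the general technique, not MARM's implementation: the helper names (`pack_vector`, `add_memory`, `recall`) are hypothetical, and the three-dimensional vectors are toy stand-ins for real all-MiniLM-L6-v2 embeddings.

```python
import json
import math
import sqlite3
import struct
import uuid
from datetime import datetime, timezone

SCHEMA = """
CREATE TABLE IF NOT EXISTS memories (
    id TEXT PRIMARY KEY,
    session_name TEXT NOT NULL,
    content TEXT NOT NULL,
    embedding BLOB,
    timestamp TEXT NOT NULL,
    context_type TEXT DEFAULT 'general',
    metadata TEXT DEFAULT '{}',
    created_at TEXT DEFAULT CURRENT_TIMESTAMP
);
"""


def pack_vector(vec):
    """Serialize floats as little-endian float32 bytes for the BLOB column."""
    return struct.pack(f"<{len(vec)}f", *vec)


def unpack_vector(blob):
    """Inverse of pack_vector: four bytes per float32 value."""
    return list(struct.unpack(f"<{len(blob) // 4}f", blob))


def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def add_memory(conn, session_name, content, embedding, context_type="general"):
    """Insert one memory row and return its generated id (hypothetical helper)."""
    memory_id = str(uuid.uuid4())
    conn.execute(
        "INSERT INTO memories (id, session_name, content, embedding, timestamp,"
        " context_type, metadata) VALUES (?, ?, ?, ?, ?, ?, ?)",
        (memory_id, session_name, content, pack_vector(embedding),
         datetime.now(timezone.utc).isoformat(), context_type, json.dumps({})),
    )
    return memory_id


def recall(conn, query_embedding, limit=3):
    """Return the top (score, content) pairs, most similar first."""
    rows = conn.execute(
        "SELECT content, embedding FROM memories WHERE embedding IS NOT NULL"
    ).fetchall()
    scored = [(cosine(query_embedding, unpack_vector(blob)), content)
              for content, blob in rows]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:limit]


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
# Toy 3-dimensional vectors stand in for real sentence embeddings.
add_memory(conn, "demo", "Use WAL mode for the SQLite database", [0.9, 0.1, 0.0], "code")
add_memory(conn, "demo", "Chapter 3 covers the hero's backstory", [0.0, 0.2, 0.9], "book")
results = recall(conn, [1.0, 0.0, 0.0], limit=1)
print(results[0][1])
```

A brute-force scan like this is fine for small stores; larger ones would typically want a vector index.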

sessions - Session Management

    CREATE TABLE sessions (
        session_name TEXT PRIMARY KEY,
        marm_active BOOLEAN DEFAULT FALSE,
        created_at TEXT DEFAULT CURRENT_TIMESTAMP,
        last_accessed TEXT DEFAULT CURRENT_TIMESTAMP,
        metadata TEXT DEFAULT '{}'
    );

Plus: log_entries, notebook_entries, user_settings
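The `sessions` table lends itself to an upsert pattern: create the row on first use, then refresh `last_accessed` on later calls. The sketch below shows that pattern with SQLite's `ON CONFLICT` clause. The `touch_session` helper is hypothetical and only illustrates how the columns above might be used; it is not taken from the MARM codebase.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS sessions (
    session_name TEXT PRIMARY KEY,
    marm_active BOOLEAN DEFAULT FALSE,
    created_at TEXT DEFAULT CURRENT_TIMESTAMP,
    last_accessed TEXT DEFAULT CURRENT_TIMESTAMP,
    metadata TEXT DEFAULT '{}'
);
"""


def touch_session(conn, session_name, active=True):
    """Create the session if missing; otherwise update its flag and last_accessed.

    Hypothetical helper; requires SQLite 3.24+ for the upsert syntax.
    """
    conn.execute(
        """
        INSERT INTO sessions (session_name, marm_active)
        VALUES (?, ?)
        ON CONFLICT(session_name) DO UPDATE SET
            marm_active = excluded.marm_active,
            last_accessed = CURRENT_TIMESTAMP
        """,
        (session_name, int(active)),
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
touch_session(conn, "project-alpha")                 # first call inserts the row
touch_session(conn, "project-alpha", active=False)   # second call updates it in place
row_count = conn.execute("SELECT COUNT(*) FROM sessions").fetchone()[0]
active_flag = conn.execute(
    "SELECT marm_active FROM sessions WHERE session_name = ?", ("project-alpha",)
).fetchone()[0]
print(row_count, active_flag)
```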

📈 Performance & Scalability

Production Optimizations

- Custom SQLite Connection Pool: Thread-safe with configurable limits (default: 5)

- WAL Mode: Write-Ahead Logging for concurrent access performance

- Lazy Loading: Semantic models loaded only when needed (resource efficient)

- Intelligent Caching: Memory usage optimization with cleanup cycles

- Response Size Management: MCP 1MB compliance with smart truncation
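The first two optimizations above, a thread-safe connection pool and WAL mode, can be sketched with the standard library alone. The following is a minimal illustration under stated assumptions rather than MARM's actual pool: the `SQLitePool` class, its method names, and the demo table are hypothetical, and only the default size of 5 comes from the list above.

```python
import os
import queue
import sqlite3
import tempfile
from contextlib import contextmanager


class SQLitePool:
    """Fixed-size pool of SQLite connections (hypothetical sketch)."""

    def __init__(self, db_path, max_connections=5):
        # queue.Queue is thread-safe, so borrowing and returning need no extra lock.
        self._pool = queue.Queue(maxsize=max_connections)
        for _ in range(max_connections):
            # check_same_thread=False lets a connection be used by whichever
            # thread borrows it; the pool ensures only one thread holds it at a time.
            conn = sqlite3.connect(db_path, check_same_thread=False)
            conn.execute("PRAGMA journal_mode=WAL")
            self._pool.put(conn)

    @contextmanager
    def connection(self, timeout=5.0):
        """Borrow a connection; raises queue.Empty if none frees up in time."""
        conn = self._pool.get(timeout=timeout)
        try:
            yield conn
        finally:
            self._pool.put(conn)

    def close(self):
        """Close every idle connection currently in the pool."""
        while not self._pool.empty():
            self._pool.get_nowait().close()


# WAL needs a file-backed database; an in-memory database cannot use it.
db_path = os.path.join(tempfile.mkdtemp(), "marm_demo.db")
pool = SQLitePool(db_path, max_connections=5)

with pool.connection() as conn:
    journal_mode = conn.execute("PRAGMA journal_mode").fetchone()[0]
    conn.execute("CREATE TABLE IF NOT EXISTS notes (body TEXT)")
    conn.execute("INSERT INTO notes VALUES ('hello')")
    conn.commit()

# A different pooled connection sees the committed write.
with pool.connection() as conn:
    note_count = conn.execute("SELECT COUNT(*) FROM notes").fetchone()[0]

pool.close()
print(journal_mode, note_count)
```

In WAL mode readers do not block the writer and the writer does not block readers, which is why it pairs well with several pooled connections.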

Rate Limiting Tiers

- Default: 60 requests/minute, 5-minute cooldown

- Memory Heavy: 20 requests/minute, 10-minute cooldown (semantic search)

- Search Operations: 30 requests/minute, 5-minute cooldown
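One plausible way to read the tiers above is a per-IP sliding window with a cooldown once the limit is hit. The sketch below implements that reading. It is an assumption, not MARM's documented behavior: the `RateLimiter` class, the tier keys, and the 60-second window are hypothetical, and only the per-minute limits and cooldown lengths come from the list.

```python
import time
from collections import defaultdict, deque

# Limits and cooldowns (in seconds) taken from the tier list above.
TIERS = {
    "default": {"limit": 60, "cooldown": 300},
    "memory_heavy": {"limit": 20, "cooldown": 600},
    "search": {"limit": 30, "cooldown": 300},
}
WINDOW_SECONDS = 60  # assumption: "requests/minute" means a rolling 60 s window


class RateLimiter:
    """Sliding-window limiter keyed by (ip, tier); hypothetical sketch."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock                  # injectable so tests can fake time
        self._hits = defaultdict(deque)      # timestamps of recent allowed requests
        self._blocked_until = {}             # cooldown expiry per (ip, tier)

    def allow(self, ip, tier="default"):
        now = self._clock()
        key = (ip, tier)
        if self._blocked_until.get(key, float("-inf")) > now:
            return False                     # still cooling down
        hits = self._hits[key]
        while hits and now - hits[0] >= WINDOW_SECONDS:
            hits.popleft()                   # drop requests outside the window
        if len(hits) >= TIERS[tier]["limit"]:
            # Limit reached: start the cooldown and reject this request.
            self._blocked_until[key] = now + TIERS[tier]["cooldown"]
            hits.clear()
            return False
        hits.append(now)
        return True


# Demo with a fake clock so the cooldown can be observed without waiting.
fake_now = [0.0]
limiter = RateLimiter(clock=lambda: fake_now[0])
allowed = [limiter.allow("203.0.113.7", "memory_heavy") for _ in range(21)]
fake_now[0] = 599.0
still_blocked = not limiter.allow("203.0.113.7", "memory_heavy")
fake_now[0] = 601.0
allowed_again = limiter.allow("203.0.113.7", "memory_heavy")
print(allowed.count(True), still_blocked, allowed_again)
```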

Documentation for MCP

| Guide Type | Document | Description |
|---|---|---|
| Docker Setup | INSTALL-DOCKER.md | Cross-platform, production deployment |
| Windows Setup | INSTALL-WINDOWS.md | Native Windows development |
| Linux Setup | INSTALL-LINUX.md | Native Linux development |
| Platform Integration | INSTALL-PLATFORM.md | App & API integration |
| MCP Handbook | MCP-HANDBOOK.md | Complete usage guide with all 18 MCP tools, cross-app memory strategies, pro tips, and FAQ |

Competitive Advantage

vs. Basic MCP Implementations

| Feature | MARM v2.2.6 | Basic MCP Servers |
|---|---|---|
| Memory Intelligence | AI-powered semantic search with auto-classification | Basic key-value storage |
| Tool Coverage | 18 complete MCP protocol tools | 3-5 basic wrappers |
| Scalability | Database optimization + connection pooling | Single connection |
| MCP Compliance | 1MB response size management | No size controls |
| Deployment | Docker containerization + health monitoring | Local development only |
| Analytics | Usage tracking + business intelligence | No tracking |
| Codebase Maturity | 2,500+ lines of professional code | 200-800 lines |

Contributing

Aren't you sick of explaining every project you're working on to every LLM you work with?

MARM is building the solution to this. Support the project now to join a growing ecosystem. This is just Phase 1 of a 3-part roadmap, and our next build will complement MARM like peanut butter and jelly.

Join the repo that's working to give YOU control over what is remembered and how it's remembered.

Why Contribute Now?

- Ground floor opportunity - Be part of the MCP memory revolution from the beginning

- Real impact - Your contributions directly solve problems you face daily with AI agents

- Growing ecosystem - Help build the infrastructure that will power tomorrow's AI workflows

- Phase 1 complete - Proven foundation ready for the next breakthrough features

Development Priorities

- Load Testing: Validate deployment performance under real AI workloads

- Documentation: Expand API documentation and LLM integration guides

- Performance: AI model caching and memory optimization

- Features: Additional MCP protocol tools and multi-tenant capabilities

Join the MARM Community

Help build the future of AI memory - no coding required!

Connect: MARM Discord | GitHub Discussions

Easy Ways to Get Involved

- Try the MCP server (or the coming-soon CLI) and share your experience

- Star the repo if MARM solves a problem for you

- Share on social - help others discover memory-enhanced AI

- Open issues with bugs, feature requests, or use cases

- Join discussions about AI reliability and memory

For Developers

- Build integrations - MCP tools, browser extensions, API wrappers

- Enhance the memory system - improve semantic search and storage

- Expand platform support - new deployment targets and integrations

- Submit pull requests - Every PR helps MARM grow. Big or small, I review each one with respect and openness to see how it can improve the project.

⭐ Star the Project

If MARM helps with your AI memory needs, please star the repository to support development!

License & Usage Notice

This project is licensed under the MIT License. Forks and derivative works are permitted.

However, use of the MARM name and version numbering is reserved for releases from the official MARM repository.

Derivatives should clearly indicate they are unofficial or experimental.

Project Documentation

Usage Guides

- MARM-HANDBOOK.md - Original MARM protocol handbook for chatbot usage

- MCP-HANDBOOK.md - Complete MCP server usage guide with commands, workflows, and examples

- PROTOCOL.md - Quick start commands and protocol reference

- FAQ.md - Answers to common questions about using MARM

MCP Server Installation

- INSTALL-DOCKER.md - Docker deployment (recommended)

- INSTALL-WINDOWS.md - Windows installation guide

- INSTALL-LINUX.md - Linux installation guide

- INSTALL-PLATFORMS.md - Platform installation guide

Chatbot Installation

- CHATBOT-SETUP.md - Web chatbot setup guide

Project Information

- README.md - This file - ecosystem overview and MCP server guide

- CONTRIBUTING.md - How to contribute to MARM

- DESCRIPTION.md - Protocol purpose and vision overview

- CHANGELOG.md - Version history and updates

- ROADMAP.md - Planned features and development roadmap

- LICENSE - MIT license terms

mcp-name: io.github.Lyellr88/marm-mcp-server

Built with ❤️ by MARM Systems - Universal MCP memory intelligence